Fig. 1: Prototype of Sound Following Smart Microphone

Problem Statement:

Detect the speaker and direct a microphone towards them.

Design a module which enables better communication by directing the microphone towards the current speaker.

Initial Proposition for the module:

The module has two microphones for localization fixed opposite to each other (15cm apart) and a third one which is the actual microphone to be directed to the speaker. This is fixed on a rotating column.

Problem Modelling:

Fig. 2: Flowchart for Source Triangulation

The problem solution consists of three stages:

1. Sound source detection:-

We use a simple sensor to detect the sound signal, in our case a simple diaphragm-based microphone. A grid of sensors, such as multiple microphones, could be used, but to begin with we focus on solving the problem efficiently and so choose two microphones. This is, in fact, how our ears solve the problem. As shown later, the best solution is obtained with a single microphone.

2. Acoustic Localization:-

The first task is to determine the direction of the sound source, in our case the speaker in a conference. Since the microphone rotates about a fixed axis, it is sufficient to determine the angle of the sound wave’s propagation relative to a fixed reference direction in the plane perpendicular to the axis of rotation. This reduces the general problem of finding a direction in 3-D space to finding a single angle.

Fig. 3: Image showing Microphone Sound Cones

3. Moving the microphone:-

Once the location of the sound source is determined, we start to move the main microphone towards it. This is not a one-step process: the mic is moved in stages of a fixed angle (say 10º), and after each stage the whole process of finding the source and moving the mic is repeated. This staged approach reduces the probability of error and gives more confidence in the speaker’s position.

Design possibilities for Acoustic Localization

1. Intensity variation at the detection points

This approach exploits the basic property that the intensity of sound decreases with distance. The intensity therefore differs at the two detection mics, and the mic that detects the higher intensity is the one closer to the source.

Shortcomings:

However, this method fails in practice, because the difference in intensity detected at the two mics is very small: the separation between them is short (<15 cm).
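To get a feel for how small this difference is, here is a rough sketch. It assumes free-field spreading from a point source (sound pressure falling as 1/r) and a source lying on the line through both mics, which is the most favourable case; neither assumption comes from the original experiment, and the function name is illustrative.

```python
import math

def level_difference_db(near_distance_m, mic_spacing_m=0.15):
    """Level difference in dB between the nearer and the farther mic.

    Assumption: free-field point source, so pressure falls as 1/r and the
    level difference is 20*log10(r_far / r_near). The source is taken to
    lie on the line through both mics (the best case for this method).
    """
    if near_distance_m <= 0 or mic_spacing_m < 0:
        raise ValueError("distances must be positive")
    far_distance_m = near_distance_m + mic_spacing_m
    return 20.0 * math.log10(far_distance_m / near_distance_m)

# Print the difference for a few speaker distances.
for distance_m in (0.5, 1.0, 2.0, 4.0):
    print(f"speaker at {distance_m:.1f} m: {level_difference_db(distance_m):.2f} dB")
```

Under these assumptions a speaker 2 m away produces a difference of well under 1 dB, which is easily masked by room reflections and mismatch between the two microphones.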

2. Phase difference at the detection points

Since the wave has to travel different distances to reach the two mics, there is a phase difference between the sound signals at the two points. This phase difference can be used to infer the direction of the source.

Shortcomings:

The problem here is the complexity of the sensors needed to detect the phase difference.

3. Arrival time at the detection points

With the two-mic system, the sound signal takes a different time to reach each mic, so there is a difference in arrival time between the two. The larger this time gap, the more the source is “tilted” towards the microphone that receives the signal first. One approach is to repeat this measurement after moving the microphone by a small angle each time, continuing until the microphone is aligned with the source. The other is to calculate the angle directly from the time difference and rotate the microphone accordingly.
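For the second approach, a common way to turn a time difference into an angle is the far-field relation sin(θ) = c·Δt / d. The sketch below assumes a distant source (plane wavefront), a speed of sound of 343 m/s, and the 15 cm spacing mentioned earlier; the function name and sign convention are illustrative and not taken from the original design.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at roughly 20 °C
MIC_SPACING_M = 0.15        # spacing of the two localization mics

def angle_from_time_difference(delta_t_s,
                               spacing_m=MIC_SPACING_M,
                               speed_m_s=SPEED_OF_SOUND_M_S):
    """Bearing of the source in degrees, measured from broadside.

    0 means the source is equidistant from both mics; +/-90 means it lies
    on the line through them. Far-field (plane wave) assumption.
    """
    ratio = speed_m_s * delta_t_s / spacing_m
    # Measurement noise can push the ratio slightly outside [-1, 1].
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))

# Largest possible delay for this spacing (source on the mic axis).
max_delay_s = MIC_SPACING_M / SPEED_OF_SOUND_M_S
print(f"maximum delay: {max_delay_s * 1e6:.0f} microseconds")
print(f"bearing at half the maximum delay: "
      f"{angle_from_time_difference(max_delay_s / 2):.1f} degrees")
```

With these numbers the whole angular range maps onto a delay of less than half a millisecond, so the timing measurement has to be correspondingly fine.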

Shortcomings:

The problem here is that the difference in arrival time is substantial only at the first instant (just after the sound begins). Afterwards, the sound signal is always present at both detection points because the speaker is speaking continuously, so no difference can be detected after that first instant.

Thus, after careful consideration, the final solution accepted is as follows:

Solution chosen:

Using a single high sensitivity directional microphone

· This solution exploits the basic working principle of radar.

· We use a high sensitivity directional microphone mounted on a rotating platform.

· This platform rotates continuously taking periodic samples and sending them to the MPU (Main Processing Unit).

· The sound samples taken are compared with respect to their energy content and the maximum is computed.

· Finally, the microphone is directed towards the speaker.

Implementation:

The implementation is divided into 2 major segments:

1. Using digital implementation

2. Replacing various blocks with their analog equivalents and thus making an analog based solution.

Proof of Concept:

Fig. 4: Flowchart for implementation of sound triangulation

Basic Block Diagram (For digital implementation)

Fig. 5: Overview of digital implementation of sound triangulation

Gathering Sound Signals:

· For this we are using a high sensitivity directional microphone (such as a collar mic).

· Since the microphone has a standard 3.5 mm jack (male connector), interfacing with it was not an issue: we simply plug it into the female audio connector on our PU (Processing Unit), which is the laptop.

· This microphone has no ADC in it, so it outputs only an analog signal representing the sound.

Fig. 6: Typical Image of Microphone

Finding energy content of samples:

· The mic is mounted on a platform that is rotated by a servo motor attached at the bottom.

· We have programmed the servo to move 20º every second. Since the servo can only move through 180º, it moves 9 times, taking 9 seconds in total. Note that this solution covers a 180º sweep and not a complete circle.

· This divides the experiment space into 9 sectors

· A sound sample (of 1 second) is taken in every sector.

· Hence we have 9 samples to work upon and compute the maximum.

· These 9 samples are taken in MATLAB.

· Here the sound object is converted into digital samples and stored in matrix form.

· The energy is calculated by multiplying each sample’s matrix with itself.

· A comparison is made and the maximum is found.

· Probabilistically, the sector with maximum energy is closest to the speaker and thus we have localised the sound source.

· Using serial communication, this value (sector number) is sent to the microcontroller.
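The energy comparison in the steps above can be sketched as follows. The original processing was done in MATLAB; this is a stand-in written in Python, with energy taken as the sum of squared sample values. The synthetic recordings, the 8 kHz sample rate, and the function names are assumptions made for illustration only.

```python
import math

def signal_energy(samples):
    """Energy of one recording: the sum of its squared sample values."""
    return sum(s * s for s in samples)

def loudest_sector(sector_recordings):
    """Return (sector_number, energies); sector numbers start at 1."""
    if not sector_recordings:
        raise ValueError("need at least one recording")
    energies = [signal_energy(rec) for rec in sector_recordings]
    best_index = max(range(len(energies)), key=energies.__getitem__)
    return best_index + 1, energies

def synthetic_recording(amplitude, n_samples=800,
                        tone_hz=440.0, sample_rate_hz=8000.0):
    """Hypothetical test signal: a sine tone standing in for speech."""
    return [amplitude * math.sin(2 * math.pi * tone_hz * n / sample_rate_hz)
            for n in range(n_samples)]

# One made-up amplitude per sector; the speaker is loudest in sector 7.
amplitudes = [0.10, 0.12, 0.15, 0.20, 0.35, 0.60, 0.90, 0.55, 0.30]
recordings = [synthetic_recording(a) for a in amplitudes]

best_sector, energies = loudest_sector(recordings)
print("sector with maximum energy:", best_sector)
```

If two sectors tie, this sketch reports the first one encountered in the sweep; a real system might prefer to average over several sweeps before deciding.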

Fig. 7: Typical Image of Servo Motor

Moving the Servo to the speaker

· Based on the sector with the maximum speech-sample energy, the appropriate angle is calculated for the servo motor.

· For example:

o The sector value lies between 1 and 9

o Suppose the desired sector is 7

o Angle = 7 × 20º = 140º

· Thus the motor has to move by 140 degrees to reach the speaker

· The whole process is repeated continuously to find the speaker in the whole conversation.
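The sector-to-angle step is simple enough to express as a small helper. This sketch uses the same 20º sector width and 9 sectors as above; the function name and the range check are additions for illustration, not part of the original firmware.

```python
SECTOR_WIDTH_DEG = 20  # servo step used in the sweep
SECTOR_COUNT = 9       # 180 degrees of travel / 20 degrees per step

def sector_to_angle(sector):
    """Servo angle in degrees for a sector number between 1 and 9."""
    if not 1 <= sector <= SECTOR_COUNT:
        raise ValueError(f"sector must be in 1..{SECTOR_COUNT}, got {sector}")
    return sector * SECTOR_WIDTH_DEG

print(sector_to_angle(7))  # the worked example: 7 * 20 = 140
```

Rejecting out-of-range values guards against a corrupted byte on the serial link driving the servo past its 180º limit.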

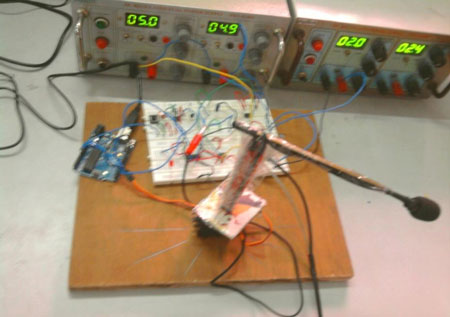

Prototype:

Fig. 8: Image showing Mic Assembly for Sound Following Smart Microphone

Implementation Phase II:

With the above digital solution it can be concluded that the solution chosen works quite efficiently to solve the given problem.

Block Diagram:

Fig. 9: Block Diagram of Sound Following Smart Microphone

*The value of various components can be seen from the diagram above.

Methodology:

· The sound signal from the microphone is fed to a de-noising filter, which removes the noise frequencies from the signal.

– This removes the ambient noise of the environment.

· The above output is supplied to an amplification stage where an inverting amplifier amplifies the sound signal by a factor of 50.

· The amplified signal is compared with an adjustable voltage threshold which is determined by experimental observations.

– This threshold should be increased if the environment is noisier, so that the direction of the sound signal is still determined accurately.

· The above output is NAND-ed with HIGH to give inverted output.

· The output of the previous stage is too short a pulse to drive the motor. We therefore work around this by using the signal as a trigger for a monostable multivibrator, which gives a pulse of sufficient (adjustable) duration. This is sent as the control signal for the motor.
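The signal chain above is analog hardware, but its logic can be mimicked in a discrete-time sketch to see how the stages fit together. The de-noising filter is left out, the monostable is assumed to trigger on a falling edge and to ignore retriggering while its pulse is active, and all sample values and function names are made up for illustration.

```python
GAIN = -50.0  # inverting amplifier, gain magnitude 50

def amplify(samples, gain=GAIN):
    """Inverting amplification stage."""
    return [gain * s for s in samples]

def compare(samples, threshold_v):
    """Comparator: True (HIGH) where the signal exceeds the threshold."""
    return [s > threshold_v for s in samples]

def nand_with_high(bits):
    """NAND with a constant HIGH input, which acts as an inverter."""
    return [not (b and True) for b in bits]

def monostable(trigger_bits, pulse_len):
    """Stretch each falling edge of the trigger into a fixed-length pulse.

    Assumption: falling-edge trigger, non-retriggerable.
    """
    out = []
    remaining = 0
    previous = True  # idle level of the inverted (active-low) line
    for bit in trigger_bits:
        if remaining == 0 and previous and not bit:
            remaining = pulse_len
        out.append(remaining > 0)
        if remaining > 0:
            remaining -= 1
        previous = bit
    return out

# Hypothetical mic samples in volts; only the third one is loud enough.
mic_v = [0.001, 0.002, -0.012, 0.001, 0.0, 0.0, 0.0]
trigger = nand_with_high(compare(amplify(mic_v), threshold_v=0.4))
motor_control = monostable(trigger, pulse_len=3)
print(motor_control)
```

In this run the single above-threshold sample is stretched into a three-sample pulse, which is the role the monostable plays in giving the motor a control signal of usable duration.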

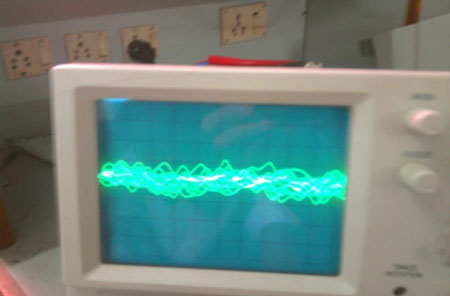

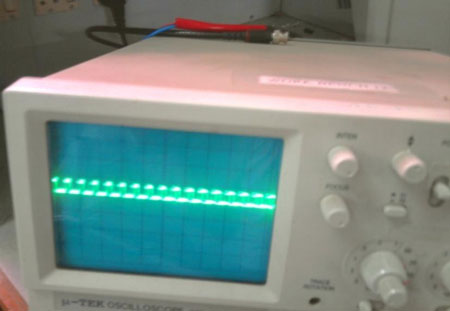

Observations:

The figure below shows the output of the CRO, showing the amplified sound signal.

Fig. 10: Image showing sound signals observed on CRO

The original signal, after the de-noise filter, is of the order of 10-15 mV. The (inverting) amplification stage brings it to the order of 0.4-0.5 V. This amplitude can safely be used for the subsequent processing.

The next image shows the output of the signal after the comparator stage. It remains LOW when the sound is not directly within the cone of the mic. But as soon as the mic is aligned with the source, that is, the sound exceeds the threshold, the output becomes HIGH.

Fig. 11: Image showing trigger signals on CRO

The duration of the pulse is very short (~10 ns), so it cannot be used to drive the motor. Hence, we use a monostable multivibrator to generate a pulse of suitable duration, using the previous signal as a trigger.

Results:

The pulse from the multivibrator is sent to a pin on the microcontroller. When this pulse is high, it means that the source has been localised, so the motor is stopped for a fixed period (which can be modified as needed).

Final Product:

The microphone is mounted on top of a light-weight stand which in turn rests on the servo. The servo is fixed on the base. The image below depicts it all.

Fig. 12: Prototype of Sound Following Smart Microphone

Applications:

• In video conference systems it is often useful to find who is speaking and direct the microphone to that person. This project aims to design such a system.

• The system, if improved, can follow a sound source for effective recording.

