Neural networks have attracted a great deal of interest in recent years and are now applied in almost every technological field to solve a wide range of problems more easily and conveniently.

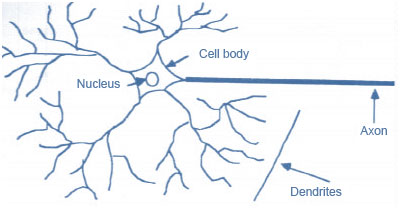

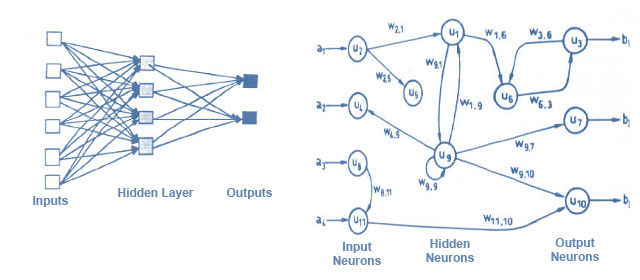

Fig. 1: A Representational Image Of Neural Networks

This success stems from their flexibility: neural networks can model many complicated functions with relative ease.

Neural networks are considered a prominent component of future Artificial Intelligence. Today the phrase "neural networks" is used synonymously with Artificial Neural Networks (ANNs), whose working concept resembles that of the human nervous system, hence the name.

In the human body, neural networks are the building blocks of the nervous system, which controls and coordinates the body's activities.

A biological neural network consists of a group of neurons (nerve cells) interconnected to carry out a specific function. Each neuron, or nerve cell, is made up of a cell body called the cyton and a long fiber called the axon.

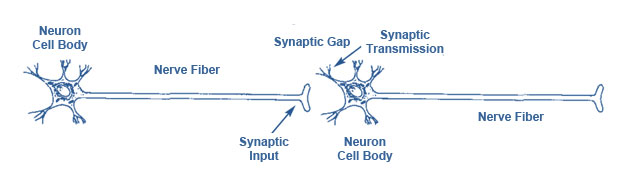

Fig. 2: An Image Of Neuron In Human Body

Neurons are interconnected through branched fibers called dendrites, which meet the fibers of neighboring neurons at small gapped junctions called synapses. Electrical impulses (called action potentials) transmit information from neuron to neuron throughout the network.

Fig. 3: Neuron To Neuron Signal Transmission In Human Body

Artificial Neural Networks (ANNs) were developed on a working principle similar to that of biological neural networks.

Artificial neurons are similar to their biological counterparts. Each input to an artificial neuron is multiplied by the weight of its connection, and the weighted inputs are summed. The sum is fed into an activation function to produce the neuron's output. A common choice is the sigmoid activation function, whose output varies between 0 (for low input values) and 1 (for high input values). This output is then passed as input to other neurons through further connections, each of which is weighted, and these weights determine the behavior of the network.
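The computation described above can be sketched in a few lines of Python. This is only an illustration: the input values, the weights and the optional `bias` term are made-up assumptions, not values taken from any particular network.

```python
import math

def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs, passed through the sigmoid activation.

    `bias` is an assumed extra term; the text above mentions only weights.
    """
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)

# Hypothetical example values, chosen only for illustration.
inputs = [1.0, 0.5, -1.0]
weights = [0.8, -0.4, 0.3]

# Weighted sum: 0.8*1.0 + (-0.4)*0.5 + 0.3*(-1.0) = 0.3
print(round(neuron_output(inputs, weights), 3))  # about 0.574
```

Changing the weights changes how strongly each input pushes the output towards 0 or 1, which is what "learning" adjusts.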

An Artificial Neural Network (ANN) is basically an information processing system composed of a large number of interconnected processing elements (neurons) working in an integrated manner to solve specific problems. An ANN is devised for specific applications, such as pattern recognition or data classification, through a learning process.

Historical Background

The history of neural networks dates back to 1943 when McCulloch and Pitts developed some simple models of neural networks as binary devices with fixed thresholds to implement simple Boolean logic functions.

In 1958, Rosenblatt developed a system called the Perceptron, consisting of three layers with a middle "Association Layer". The system could learn to associate a given input with a random output unit.

Widrow and Hoff of Stanford University developed a system called ADALINE (ADAptive LInear Element) in 1960 which was an analog electronic device and was based on the Least-Mean-Squares (LMS) learning rule.

The growing popularity of neural networks led to many other developments, such as the principle of heterostasis by A. Harry Klopf in 1972, the backpropagation learning method by Paul Werbos in 1974, and the Cognitron, a stepwise-trained multilayered neural network for interpreting handwritten characters, developed by Kunihiko Fukushima in 1975.

Since then, significant advancements have been accomplished in the field of neural networks.

Purpose of using neural networks:

The following advantageous features of neural networks have led to their extensive use:

- Adaptive learning: The capacity to learn how to do tasks based on the data provided.

- Self-Organizing Ability: An ANN can create its own organization or representation of the information it receives during learning.

- Real Time and Parallel Operation: Multiple ANN computations can be carried out in parallel.

- Fault Tolerance through Redundant Information Coding: Partial destruction of a network leads to a corresponding degradation of performance. However, some network capabilities may be retained even with major network damage.

Comparative Study

Neural networks and conventional computers: A comparative distinction

Conventional computers follow specific algorithms to solve particular problems, whereas neural networks learn from experience and examples and are not programmed with a task-specific algorithm.

Because neural networks learn by themselves, their behavior can sometimes be unpredictable and unexpected. The behavior of conventional computers, by contrast, is entirely predictable because of their cognitive approach to problem solving.

Human Neurons versus Artificial Neurons

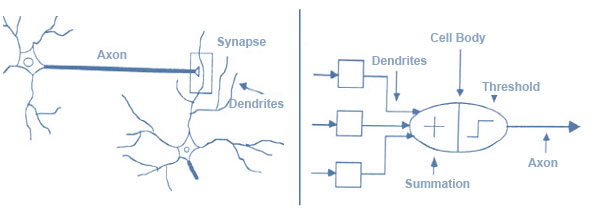

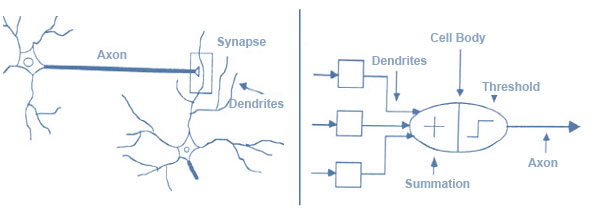

Fig. 4: Image Comparing Human Neurons With Artificial Neurons

An artificial neuron is a device with many inputs and a single output. It has two modes of operation: the training mode and the using mode.

In the training mode, the neuron is trained to fire (or not) for particular input patterns.

In the using mode, when a taught input pattern is detected at the input, its associated output becomes the current output. If the input pattern does not belong to the taught list of input patterns, the firing rule is used to determine whether to fire or not.

Firing rules

Firing rules determine how a neural network responds. A firing rule decides whether a neuron should fire (respond) for any given input pattern, and it covers every possible input pattern, not only the taught ones.

An Illustration: Pattern Recognition

Pattern recognition is one of the most important applications of Neural Networks.

Pattern recognition can be implemented by using a feed-forward neural network (Fig. 5). During the training phase, the network learns to associate outputs with input patterns. When the network is used, it identifies the input pattern and tries to produce the associated output pattern.

If a neural network is given an unknown pattern (having no associated output pattern) at its input, the network gives the output that corresponds to a taught input pattern that is least different from the given pattern.

Fig. 5: Diagram Of Pattern Recognition Implementation Using Feed-Forward Neural Network In Neuron

The network in Fig. 5 is trained to recognize the patterns T and H. The associated output patterns are all black and all white respectively, as shown below.

Fig. 6: Figure Shows Output Pattern Associated With Top Neuron

If we represent black squares with 0 and white squares with 1 then the truth tables for the 3 neurons after generalization are:

| X11: | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |
|------|---|---|---|---|---|---|---|---|
| X12: | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 |
| X13: | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |
| OUT: | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 |

Fig. 7: Truth Table For Top Neuron In Pattern Recognition

| X21: | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |
|------|---|---|---|---|---|---|---|---|
| X22: | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 |
| X23: | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |
| OUT: | 1 | 0/1 | 1 | 0/1 | 0/1 | 0 | 0/1 | 0 |

Fig. 8: Truth Table For Second Neuron In Pattern Recognition

| X31: | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |
|------|---|---|---|---|---|---|---|---|
| X32: | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 |
| X33: | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |
| OUT: | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 0 |

Fig. 9: Truth Table For Third Neuron In Pattern Recognition
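The three truth tables are consistent with a simple "least different taught pattern" firing rule: count the positions in which an input differs from each taught pattern (the Hamming distance) and copy the output of the closer one, leaving the output undefined (0/1) on a tie. The sketch below is one possible reading of that rule, not necessarily the exact procedure used to build the tables. The taught rows in `TAUGHT_ROWS` are an assumption inferred from the tables themselves, since the T and H images are not reproduced in the text.

```python
from itertools import product

def hamming(a, b):
    """Number of positions at which two equal-length patterns differ."""
    return sum(x != y for x, y in zip(a, b))

def firing_rule(pattern, fire_patterns, no_fire_patterns):
    """Return 1 (fire), 0 (do not fire), or "0/1" when the pattern is
    equally close to both taught sets and the output is undefined."""
    to_fire = min(hamming(pattern, p) for p in fire_patterns)
    to_no_fire = min(hamming(pattern, p) for p in no_fire_patterns)
    if to_fire < to_no_fire:
        return 1
    if to_no_fire < to_fire:
        return 0
    return "0/1"

# Assumed taught rows (0 = black, 1 = white), inferred from the truth tables.
# The T row is taught to give 0 (black), the H row to give 1 (white).
TAUGHT_ROWS = {
    "top":    {"T": (0, 0, 0), "H": (0, 1, 0)},
    "middle": {"T": (1, 0, 1), "H": (0, 0, 0)},
    "bottom": {"T": (1, 0, 1), "H": (0, 1, 0)},
}

def truth_table(neuron):
    """Outputs for all eight inputs, in the same column order as the tables."""
    rows = TAUGHT_ROWS[neuron]
    return [firing_rule(p, [rows["H"]], [rows["T"]])
            for p in product((0, 1), repeat=3)]

for name in TAUGHT_ROWS:
    print(name, truth_table(name))
```

Under these assumptions, the printed rows match the OUT rows of the three tables, including the four undefined (0/1) entries of the second neuron.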

The following associations can be extracted from the tables:

Fig. 10: Figure Shows Output Pattern Associated With Middle Neuron

In this case, it is obvious that the output should be all black, since the input pattern is almost the same as the 'T' pattern.

Fig. 11: Figure Shows Output Pattern Associated With Third Neuron

Here too, it is obvious that the output should be all white, since the input pattern is almost the same as the 'H' pattern.

In a third case, the top row is 2 errors away from a T and 3 from an H, so the top output is black. The middle row is 1 error away from both T and H, so its output is random. The bottom row is 1 error away from T and 2 away from H, so its output is black. The total output of the network is still in favor of the T shape.

Architecture and Learning Process

Architectural Overview of neural networks

Based on architecture, neural networks are basically of two types:

1. Feed-forward networks

Feed-forward ANNs (Fig. 12) allow signals to travel in one direction only, from input to output, with no feedback loops. This type of organization is also referred to as bottom-up or top-down.

Application: Pattern Recognition

2. Feedback networks

Feedback networks (Fig. 12) allow signals to travel in both directions by introducing loops. These networks are very powerful and can become extremely complicated. They are dynamic: their state changes continuously until they reach an equilibrium point.

Fig. 12: Image Showing Architectures In Neural Network
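The difference between the two architectures can be illustrated with a small sketch. In the feed-forward case the output is computed in a single pass, while in the feedback case each unit also receives the previous outputs of the units, so the computation is repeated until the state stops changing. All weights, biases and the tolerance `tol` below are hypothetical values chosen for illustration, and the simple update loop is only one of many possible feedback schemes.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: each unit takes a weighted sum of all inputs, then a sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def feed_forward(inputs, layers):
    """Signals flow strictly from input to output in one pass, with no loops."""
    signal = inputs
    for weights, biases in layers:
        signal = layer(signal, weights, biases)
    return signal

def feedback(inputs, weights, feedback_weights, biases, tol=1e-6, max_steps=1000):
    """Units also receive the previous state; iterate until the state settles."""
    state = [0.0] * len(biases)
    for step in range(1, max_steps + 1):
        new_state = [
            sigmoid(sum(w * x for w, x in zip(w_in, inputs))
                    + sum(v * s for v, s in zip(w_fb, state)) + b)
            for w_in, w_fb, b in zip(weights, feedback_weights, biases)
        ]
        if max(abs(n - s) for n, s in zip(new_state, state)) < tol:
            return new_state, step
        state = new_state
    return state, max_steps

# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output unit.
LAYERS = [
    ([[0.5, -0.6], [0.3, 0.8]], [0.0, -0.1]),
    ([[1.0, -1.0]], [0.2]),
]
output = feed_forward([1.0, 0.0], LAYERS)

# Hypothetical feedback network with two units that feed into each other.
state, steps = feedback([1.0, 0.0],
                        weights=[[0.5, -0.6], [0.3, 0.8]],
                        feedback_weights=[[0.0, 0.5], [-0.5, 0.0]],
                        biases=[0.0, -0.1])
print(output, state, steps)
```

With these small feedback weights the state settles after a handful of iterations. Larger feedback weights may oscillate or fail to settle, which is part of what makes feedback networks harder to analyze.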

Brief Explanation of the Learning Process

Neural networks learn to recognize patterns and respond to them in the following two ways.

1. Associative mapping, in which the network learns to produce a particular pattern on its output units whenever a particular pattern is applied to its input units.

2. Regularity detection, in which units learn to respond to particular properties of the input patterns.

In associative mapping the network stores the relationships among patterns, whereas in regularity detection the response of each unit has a particular 'meaning'.

Behavior of an Artificial Neural Network: Its Transfer Function

The transfer function typically falls into one of three categories:

- linear (or ramp): output proportional to the total weighted input

- threshold: the total input is compared with a predetermined threshold value and the output is decided accordingly

- sigmoid: output varies continuously, but not linearly, as the input changes
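The three categories can be compared side by side with a short sketch. The `slope` and `theta` parameters are assumed names and default values, introduced here only for illustration.

```python
import math

def linear(total, slope=1.0):
    """Output proportional to the total weighted input."""
    return slope * total

def threshold(total, theta=0.0):
    """Output 1 if the total input reaches the threshold, otherwise 0."""
    return 1 if total >= theta else 0

def sigmoid(total):
    """Output varies smoothly between 0 and 1, but not linearly."""
    return 1.0 / (1.0 + math.exp(-total))

# Compare the three transfer functions on a few total-input values.
for total in (-2.0, 0.0, 2.0):
    print(total, linear(total), threshold(total), round(sigmoid(total), 3))
```

For a total input of 2.0, for example, the linear unit returns 2.0, the threshold unit returns 1, and the sigmoid unit returns about 0.881.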

ANN Applications

Neural Networks in Real Time Applications

Neural networks can be applied wherever a relationship exists between a set of independent inputs (predictors) and dependent outputs (predicted variables).

Some of the real-time applications of neural networks include:

- Monitoring and Detection of various medical phenomena like Heart rate, blood pressure, respiration rate, etc

- Predictions for fluctuations in Stock Market based on several factors like past data, economic factors, etc.

- Banking activities like validation of a customer, disbursement of a loan, etc.

- Condition monitoring of industrial equipment.

- Sensor based applications

- Sales Forecasting

- Industrial Process Control

- Customer Research

- Risk Management

Some specific applications include: recognition of speakers in communications, diagnosis of hepatitis, recovery of telecommunications from faulty software, interpretation of multimeaning Chinese words, undersea mine detection, texture analysis, 3-D object recognition, hand-written word recognition and facial recognition.

Conclusion

The ever-progressing electronic world has a lot to gain from neural networks. Their ability to learn by example makes them flexible and powerful.

Neural networks also do not need a specific algorithm to be devised for a specific task; that is, there is no need to understand the internal mechanisms of the task. They are also well suited to real-time systems because of their fast response and computation times, which result from their parallel architecture.

Neural networks also contribute to other areas of research such as neurology and psychology. They are regularly used to model parts of living organisms and to investigate the internal mechanisms of the brain.

Neural networks therefore play a significant role in technological advancement and in approaches to problem solving.
