A team of MIT researchers has reported success with a soft robotic arm that “understands” its configuration in three-dimensional space. To do so, the robot uses position and motion data from its own “sensorized” skin. Soft robots are promoted as more resilient, adaptable, safer, bio-inspired alternatives to conventional rigid robots. They are built from compliant materials similar to those found in living beings.

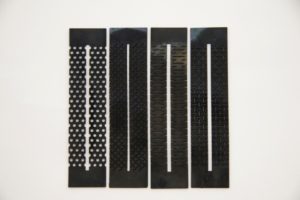

The researchers’ soft sensors are conductive silicone sheets cut into kirigami patterns. They have “piezoresistive” properties, meaning they change in electrical resistance when strained. As the sensor deforms in response to the robotic arm’s stretching and compressing, its electrical resistance is converted to an output voltage that’s then used as a signal correlating to that movement. (Source: Ryan L. Truby, MIT CSAIL)

However, giving these soft robots autonomous control is extremely challenging because they can move in a virtually infinite number of directions at any given moment. This makes it much harder to develop the control and planning models that drive automation.

Typical automation control techniques rely on large networks of motion-capture cameras to track and direct a robot’s 3D position and movements. This approach is cumbersome and nearly impossible to apply in real-world settings.

As an alternative, the MIT researchers covered their robot with a system of sensors that collect the needed information about position, direction, and movement. That information is then fed to a deep-learning model that filters out the noise and captures clear signals to estimate the robot’s 3D configuration.

The team demonstrated the validity of this new system by developing a soft robotic arm that can determine its position while stretching and swinging around. All sensors used for these robots were created with off-the-shelf materials, which means any lab can create them.

“We’re sensorizing soft robots to get feedback for control from sensors, not vision systems, using a very easy, rapid method for fabrication,” said Ryan Truby, a postdoc in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). “We want to use these soft robotic trunks, for instance, to orient and control themselves automatically to pick things up and interact with the world. This is the first step toward that type of more sophisticated automated control.”

The team aims to use this research in the development of artificial limbs that can move and act as naturally and as dextrously as possible.

Significance

It’s not impossible to make sensors from soft materials, but doing so requires a specialized design. This complicates matters for many robotics labs that work with limited budgets and resources.

Truby explained the inspiration behind these sensor materials: “I found these sheets of conductive materials used for electromagnetic interference shielding, that you can buy anywhere in rolls.”

These conductive materials have “piezoresistive” properties, which means their electrical resistance changes when they are strained. Truby realized that if the materials were placed at specific points on the trunk, they could make effective soft sensors. As the trunk stretches and compresses, the sensors deform, and the resulting change in electrical resistance is converted into an output voltage. That voltage is then used as a signal corresponding to the specific movement.
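One common way to turn a resistance change into a voltage is a series voltage divider. The sketch below assumes that circuit for illustration only; the article does not say which readout circuit the team used. The function names, the linear strain model, the gauge factor, the reference resistor, and the supply voltage are all hypothetical values chosen for the example.

```python
def strained_resistance(r0_ohm: float, strain: float, gauge_factor: float = 2.0) -> float:
    """Resistance of a piezoresistive strip under strain.

    Assumes a simple linear model, R = R0 * (1 + GF * strain).
    The gauge factor is a made-up illustrative value, not a measured one.
    """
    return r0_ohm * (1.0 + gauge_factor * strain)


def divider_voltage(r_sensor_ohm: float, r_ref_ohm: float = 10_000.0, v_in: float = 5.0) -> float:
    """Voltage across the sensor when it sits in series with a fixed reference resistor."""
    return v_in * r_sensor_ohm / (r_sensor_ohm + r_ref_ohm)


if __name__ == "__main__":
    # Sweep a few hypothetical strain levels and print the resulting signal.
    for strain in (0.0, 0.05, 0.10):
        r = strained_resistance(10_000.0, strain)
        print(f"strain={strain:.2f}  R={r:.0f} ohm  V={divider_voltage(r):.3f} V")
```

In this model the output voltage rises as the strip stretches, so a controller can read the voltage as a proxy for how far the segment has deformed.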

However, the material is not very stretchable, so it is of limited use in soft robotics. Nevertheless, Truby made the most of it by laser-cutting rectangular strips of conductive silicone sheets into different patterns, such as a chain-link fence and long rows of small holes. These patterns make the material far more flexible.

The trunk

The robotic trunk developed by the team has three segments. Each segment has four fluidic actuators that drive its movement, and each has a sensor fused to it that collects information from the embedded actuator underneath.

To bond the surface of one material to another, the team used a technique called “plasma bonding.” The benefit of this technique is that it cuts the total time needed to shape the sensors to a few hours.

A milestone

As hypothesized, the sensors succeeded in capturing the trunk’s general movements, but the signals were noisy. To overcome this challenge, the researchers created a deep neural network that handles this complexity and sifts meaningful feedback signals from the noise. It also helps estimate the soft robot’s configuration from the sensor signals.

The team also came up with a new model that describes the soft robot’s shape kinematically, thereby eliminating the need for separate models for processing. During the experimentation stage, the trunk was left to swing around and stretch itself into various configurations for about 90 minutes. For the ground-truth data, the researchers used a traditional motion-capture system.

During training, the model processed and analyzed data gathered from the sensors to estimate the configuration. The researchers then compared these predictions with the ground-truth data gathered at the same time. Through this process, the model learned to map signal patterns from the sensors to real-world configurations. The results showed that the estimated shape of the robot matched the ground truth.
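The training loop described above is a form of supervised learning: sensor readings go in, and motion-capture measurements serve as labels. The sketch below illustrates that idea with a deliberately simpler stand-in, a one-variable least-squares fit rather than the team’s deep neural network. The data is synthetic, and every name and number in it (bend angle range, volts per degree, noise level) is a hypothetical assumption, not a value from the research.

```python
import random


def make_dataset(n: int, seed: int):
    """Synthetic stand-in data: noisy sensor voltages plus 'motion-capture' bend angles."""
    rng = random.Random(seed)
    angles = [rng.uniform(-60.0, 60.0) for _ in range(n)]             # ground-truth labels (degrees)
    volts = [2.5 + 0.01 * a + rng.gauss(0.0, 0.02) for a in angles]   # noisy sensor readings (volts)
    return volts, angles


def fit_line(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_angle(volt: float, slope: float, intercept: float) -> float:
    """Estimate the bend angle from a single sensor voltage."""
    return slope * volt + intercept


# "Training": learn the voltage-to-angle map from labeled data.
train_volts, train_angles = make_dataset(500, seed=0)
slope, intercept = fit_line(train_volts, train_angles)

# "Evaluation": compare predictions with held-out ground truth.
test_volts, test_angles = make_dataset(200, seed=1)
errors = [abs(predict_angle(v, slope, intercept) - a) for v, a in zip(test_volts, test_angles)]
mae = sum(errors) / len(errors)
print(f"mean absolute error on held-out data: {mae:.2f} degrees")
```

A real soft arm has many sensors and a far more complex, nonlinear response, which is why the researchers turned to a neural network; the compare-with-ground-truth structure is the part this example is meant to show.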

The work is not yet complete, and the researchers are looking to improve sensitivity through new sensor designs. They also plan to develop new deep-learning methods and models that reduce the training required for each new soft robot. Lastly, they hope to refine the system to fully capture dynamic motions.
