Fig. 1: Image of Lexus RX450h Google Driverless Car

Driving a car is an art; maybe that’s why governments wait until you’re 18 years old (or 16 in some countries) before giving you a chance to earn a driver’s license. Not only must you be able to direct the car, you must also have a keen sense of safety. Nevertheless, all the rules and regulations enforced to ensure safety fail to eradicate accidents. On a different note, smaller details such as speed, gear changes and fuel consumption affect the environment around you through exhaust emissions. When more fuel is burnt than required, more of it burns incompletely, which emits gases like carbon monoxide (not to mention that you get lower mileage too). This happens at very low and very high speeds. Driving a car at its optimum therefore involves a delicate balance of forces, and it requires a good understanding of, and experience with, how a car works. But what if a team of brilliant engineers devised a way to automate this complicated process?

Automation

Fifteen years back, if you had to withdraw cash, you had to travel to a bank and stand in line with a withdrawal slip. Today the same is done, even in remote locations, by machines (ATMs, or Automated Teller Machines) without any hassle. In industry, most heavy machinery is fully or partially automated, allowing for more accurate and speedier production. Automation is a growing trend in the present era. It is truly an engineering marvel, since it unites different branches of engineering such as computer science, electronics and mechanical engineering. Electronic sensors sense the surroundings and send their readings to microcontrollers, which are programmed to compute a desired response, and actuators then carry out the response action.

Google’s Project

We know Google as the most popular search engine on the World Wide Web, but the company is much more than that. It inspires innovation in technology that is ahead of its time. The Google driverless car is a Google project that develops the technology to make a car drive itself. The software that Google uses to automate cars is known as “Google Chauffeur”. Google does not produce a separate car but installs the required equipment on a regular car. The project is currently being led by Google engineer Sebastian Thrun (a former director of the Stanford Artificial Intelligence Laboratory and co-inventor of Google Street View) under the aegis of Google X.

Fig. 2: Google Driverless Car Fitted in Toyota Prius and Audi TT

Google has equipped a fleet of ten cars with its technology: three Lexus RX450h, one Audi TT and six Toyota Prius. The tests were conducted with expert drivers in the driver’s seat and Google engineers in the passenger seat. The cars have covered long distances across varied terrain and traffic densities in the United States of America. The speed limits are stored in the control system, and the car has a manual override that hands control to the driver in case of any malfunction. In August 2012, Google announced that it had completed 500,000 km of road testing. As of December 2013, four states in the USA had passed laws permitting the use of autonomous cars: California, Florida, Nevada and Michigan.

How should it Work?

The aim of the project is to duplicate the actions of the ideal driver. So let’s first make a note of the variables at play when a human drives a car.

· The most important of the senses is sight. The first data we encounter is what we see around us, and we control the acceleration and braking of the car according to this visual data.

· Once our eyes receive this data, it is sent to the brain via the optic nerve. The brain examines the data and determines whether any action is required and, if so, which action.

· The action data, or stimulus, is sent to the hands and legs, which control the steering, gas pedal, brakes and clutch.

· After the action is applied, our eyes observe the scene again and send the new data to the brain. The brain decides whether what we see is what we desire and sends corrections back to the limbs. This process is called “Feedback”.
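The observe, decide, act and correct cycle described above can be sketched as a simple proportional feedback loop. This is only an illustration: the function name, the gain of 0.5 and the step count are assumptions chosen for the example, not details of Google's software.

```python
def simulate_speed_feedback(target_kmh, start_kmh, gain=0.5, steps=20):
    """Run a toy proportional feedback loop and return the speed history.

    All parameters are illustrative assumptions, not values from a real car.
    """
    speed = start_kmh
    history = [speed]
    for _ in range(steps):
        error = target_kmh - speed   # observe: compare desired and actual speed
        correction = gain * error    # decide: scale the correction to the error
        speed += correction          # act: apply the correction
        history.append(speed)        # the next pass observes the new speed
    return history


history = simulate_speed_feedback(target_kmh=40.0, start_kmh=0.0)
print(history[:4])  # the speed closes half of the remaining gap on every pass
```

Each pass through the loop shrinks the remaining error, which is the essence of feedback: the result of the last action becomes the input to the next decision.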

Here we observe that the primary sense is sight, or any other means by which we become aware of our surroundings. For example, suppose we are driving at 40 km/h and observe a person crossing the road. If the distance between the car and the person is small, say 10 m, we either brake suddenly or make a sharp turn to avoid hitting the person. If the person is 100 m away, we brake lightly and reduce speed so that the person can finish crossing.
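The pedestrian example can be put into numbers with the constant-deceleration relation a = v² / (2d). The sketch below is a rough illustration: the thresholds of 2 m/s² for comfortable braking and 8 m/s² for the hardest braking a car can manage are assumed values, and the function names are hypothetical.

```python
def required_deceleration(speed_kmh, distance_m):
    """Deceleration (m/s^2) needed to stop within distance_m, from a = v^2 / (2 d)."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    if distance_m <= 0:
        return float("inf")
    return v * v / (2.0 * distance_m)


def choose_response(speed_kmh, distance_m, comfortable=2.0, maximum=8.0):
    """Pick a response; both thresholds are assumed, illustrative values."""
    a = required_deceleration(speed_kmh, distance_m)
    if a <= comfortable:
        return "light braking"
    if a <= maximum:
        return "hard braking"
    return "evasive maneuver"  # braking alone cannot stop the car in time


print(choose_response(40, 100))  # light braking (about 0.6 m/s^2 needed)
print(choose_response(40, 10))   # hard braking (about 6.2 m/s^2 needed)
```

Under these assumptions, stopping from 40 km/h within 10 m needs roughly ten times the deceleration of stopping within 100 m, which is why the two cases call for such different reactions.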

How does it Work?

Apply this principle to the design of an electronic control system and the result is an autonomous car. Although it sounds simple, the interaction of software and hardware in a system as large as a car is quite sophisticated, and the accuracy and dynamic range required are high. The robotic vehicle “Stanley”, winner of the $2 million 2005 DARPA Grand Challenge, was an early demonstration of such a system. Let us now take a look at how the various sensors and controllers achieve this.

The primary device that monitors the environment is the “Laser Range Finder” (a Velodyne 64-beam LIDAR, for Light Detection and Ranging). The laser generates a detailed 3D image of its surroundings. The system measures the 3D environment and then compares it with high-resolution maps of the real world. Such range finders are similar to those found in laser scanners but have a longer range and higher accuracy. The laser must have a 360° view of the surroundings with no optical obstructions (windscreens and mirrors), so the ideal location is the roof of the car.

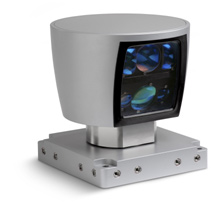

Fig. 3: Image of Velodyne 64 Beam LIDAR System
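A 3D image from a laser range finder is built up from many individual returns, each consisting of a measured range and the direction in which the beam was pointing. The sketch below shows the standard spherical-to-Cartesian conversion for one return. The axis convention (x forward, y left, z up) and the function name are assumptions for illustration, not the sensor's actual data format.

```python
import math


def lidar_return_to_point(range_m, azimuth_deg, elevation_deg):
    """Convert one range/angle measurement into an (x, y, z) point in metres.

    Assumed convention: x forward, y left, z up, angles in degrees.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = range_m * math.sin(elevation)
    return (x, y, z)


# One beam pointing 30 degrees below the horizon, straight ahead, hitting at 10 m.
print(lidar_return_to_point(10.0, 0.0, -30.0))
```

Sweeping the azimuth through a full circle for every beam yields the cloud of points that the text describes as a 3D image.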

The car is also equipped with four radars, which watch far enough ahead (beyond the range of the laser) to detect fast oncoming traffic. They are helpful on highways, where fast-moving traffic is prevalent and keen awareness is essential.

A camera is mounted on the rear-view mirror, facing forward, to detect traffic signals. The software processes the camera data and responds according to the detected signal, which may be a red, yellow or green light.

A GPS (Global Positioning System) receiver determines the latitude and longitude of the car, which are used to place it on a satellite map. The GPS is mainly used to follow a course set in advance by the user. The course data directs the vehicle along the path required to reach the chosen destination.
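To follow a course, the software needs to know how far the car is from the next point on it. A common way to get the distance between two latitude/longitude pairs is the haversine formula, sketched below. The mean Earth radius of 6,371 km is the usual approximation; the 10 m waypoint tolerance and the function names are assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, a common approximation


def haversine_distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def reached_waypoint(position, waypoint, tolerance_m=10.0):
    """True when the car is within tolerance_m of the waypoint (assumed value)."""
    return haversine_distance_m(*position, *waypoint) <= tolerance_m


# One degree of latitude is roughly 111 km.
print(round(haversine_distance_m(0.0, 0.0, 1.0, 0.0) / 1000, 1))
```

A route can then be treated as a list of waypoints that the car ticks off one after another as each comes within tolerance.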

An inertial measurement unit measures the inertial forces acting on the vehicle, that is, its acceleration and rotation. The wheels carry odometers that measure their speed of rotation (RPM). The same data can be used to estimate the load on the engine (i.e., the brake horsepower, BHP). These sensors work collectively to monitor the speed and movements of the vehicle.
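Turning a wheel's rotation rate into road speed is simple arithmetic: the wheel covers one circumference per revolution. The sketch below assumes a wheel radius of 0.3 m purely for illustration and ignores tyre slip; the function names are hypothetical.

```python
import math


def wheel_speed_ms(rpm, wheel_radius_m):
    """Road speed in m/s for a wheel turning at rpm, ignoring tyre slip."""
    circumference = 2 * math.pi * wheel_radius_m  # distance per revolution
    return rpm * circumference / 60.0             # revolutions per minute to per second


def wheel_speed_kmh(rpm, wheel_radius_m):
    """Same speed expressed in km/h."""
    return wheel_speed_ms(rpm, wheel_radius_m) * 3.6


# A wheel of assumed radius 0.3 m turning at 600 RPM.
print(round(wheel_speed_kmh(600, 0.3), 1))
```

Comparing this wheel-derived speed with the motion reported by the inertial unit and the GPS is one way the sensors can cross-check each other.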

Fig. 4: Representational Image of a Typical Driverless Car

These sensors collect data on the vehicle’s variables. Analyzing this data and producing a proper response is the job of “Artificial Intelligence”, a field in which human-like intelligence is given to machines or software through computer science and electronics. It is a multi-disciplinary field that draws on computer science, neuroscience, psychology, linguistics and philosophy, and it allows a device or program to make decisions based on certain inputs. The AI unit therefore determines the following parameters based on inputs from the hardware and Google Maps:

· How fast to accelerate the vehicle.

· When to slow it down or stop.

· When to steer the vehicle.
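As a very rough picture of how such decisions might be combined, the sketch below applies a few prioritised rules to the sensor inputs described earlier. It is a toy rule set, not Google's algorithm: the function name, the 30 m safe gap and the rule order are all assumptions, and steering is left out to keep the example short.

```python
def plan_speed_action(speed_kmh, speed_limit_kmh, light, obstacle_distance_m,
                      safe_gap_m=30.0):
    """Return a speed action from simple, prioritised rules (all assumed).

    light is one of "red", "yellow" or "green".
    """
    # Highest priority: anything that demands a full stop.
    if light == "red" or obstacle_distance_m < safe_gap_m / 3:
        return "stop"
    # Next: conditions that call for reducing speed.
    if (light == "yellow" or obstacle_distance_m < safe_gap_m
            or speed_kmh > speed_limit_kmh):
        return "slow down"
    # Road is clear: approach the stored speed limit, then hold it.
    if speed_kmh < speed_limit_kmh:
        return "accelerate"
    return "hold speed"


print(plan_speed_action(30, 50, "green", 100))  # accelerate
print(plan_speed_action(40, 50, "green", 20))   # slow down
print(plan_speed_action(40, 50, "red", 100))    # stop
```

Ordering the rules from most to least urgent means a safety condition always overrides the wish to make progress.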

The goal of the Artificial Intelligence unit is to get the passenger to the destination safely and legally by following traffic rules and regulations. Google’s cars have already passed testing, although there was one accident involving one of Google’s autonomous cars; the company states that the car was being operated manually at the time. Is this the future of road travel? Or is Google trying to reach an unreachable goal? Whatever the market may hold in store for Google, the technology has certainly lived up to the innovation and brand name of one of the world’s largest tech companies.

