October 6, 2009, Stockholm: It was a historic moment for digital cameras when their shutters opened for a fraction of a second to capture the committee awarding the coveted Nobel Prize to the men who invented their electronic eyes. Had it not been for the duo of Willard Boyle and George Smith, those cameras might still have been exposing photosensitive film that then had to be developed and hung up to dry in a darkroom. By inventing the Charge Coupled Device (CCD) in 1969 and predicting its applications, Boyle and Smith opened up the domain of solid state devices for imaging and memory applications.

Fig. 1: An Image of Charge Coupled Device

Though Boyle and Smith invented a method to convert light into an electrical signal, the research would not have been possible without Albert Einstein’s groundbreaking explanation of the photoelectric effect. Einstein explained how a material absorbs incident radiation and how the absorbed energy knocks electrons out of its surface. Boyle and Smith studied how incident light disturbs the electrons in a semiconductor and how this effect could be put to use. They succeeded by grouping a number of capacitors into an array, the elements of which make up the pixels of a digital image.

Initially named Charge Bubble Devices, the device was first recorded operating as a shift register and as a linear imaging device. It was based on a principle similar to Bucket-Brigade Devices, where charge is transferred from one capacitor to another along the semiconductor surface. Bell Labs succeeded in building the first solid state video camera in 1970. By 1971, Michael F. Tompsett and other researchers at Bell Labs had succeeded in capturing images with linear CCD arrays. After that, many semiconductor majors such as Texas Instruments, Fairchild Semiconductor and Sony started investing in CCD technologies. Among the first commercial CCD devices were those built by Fairchild by 1974: a linear array of about 500 elements and a two-dimensional 100 x 100 pixel device. The first CCD based reconnaissance satellite, KH-11 KENNAN, was launched in 1976. By 1983, CCDs had started to replace photographic plates in astronomical telescopes too. Companies like Kodak had been manufacturing CCD based professional cameras since 1985, but it was only around 1995 that cost-effective, high resolution CCD cameras started flooding the markets.

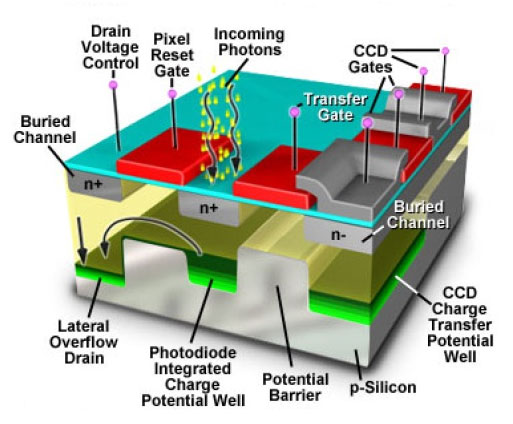

A CCD can be thought of as a subset of Charge Transfer Devices, which are based on Metal Oxide Semiconductor (MOS) capacitors. Two types of MOS capacitor, surface channel and buried channel, have been used in CCDs, but buried channel capacitors are used in most production devices because they avoid the problems caused by surface irregularities at the oxide-semiconductor interface. A thin n-type buried channel is formed on a p-type substrate through ion implantation. The silicon dioxide (SiO2) insulator layer is grown on top of the n-region, and to complete the capacitor, gates of metal or heavily doped polycrystalline silicon are deposited on top of the insulating SiO2 using a chemical vapor deposition (CVD) process. To isolate the charge packets of one column from those of the next, thermally grown oxide ‘channel stops’ are placed parallel to the channels.

Fig. 2: Graphical Image of Buried Channel MOS Capacitor

But if a CCD cell could only pass charge along, it would be just another MOS capacitor. What makes a CCD unique is that it is also sensitive to light. The response to photons comes from the epitaxial layers of doped silicon grown on the substrate. When photons strike the semiconductor surface, they dislodge electrons, creating a charge proportional to the light falling on the surface. A single CCD cell performs four functions:

1. Receive charge from the cell above it in the array.

2. Hold that charge for some time without much loss.

3. Pass that charge to the cell below it in the array.

4. Respond to an outside stimulus such as light and generate its own charge.
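The four functions listed above can be sketched as a toy model. This is only an illustration: the class name `CCDCell`, the helper `shift_down` and the quantum efficiency value of 0.5 are assumptions made for the example and do not come from any real device.

```python
class CCDCell:
    """Toy model of one CCD cell; charge is counted in electrons."""

    def __init__(self, quantum_efficiency=0.5):
        # Assumed illustrative value: fraction of photons converted to electrons
        self.qe = quantum_efficiency
        self.charge = 0.0

    def expose(self, photons):
        # Function 4: generate charge proportional to the incident light
        self.charge += self.qe * photons

    def receive(self, charge):
        # Function 1: accept the packet handed over by the cell above
        self.charge += charge

    def pass_on(self):
        # Function 3: hand the whole packet to the cell below and empty this cell
        packet, self.charge = self.charge, 0.0
        return packet


def shift_down(column):
    """Move every packet one cell down; return the packet leaving the bottom."""
    out = column[-1].pass_on()
    # Work upward from the bottom so no packet is overwritten
    for i in range(len(column) - 2, -1, -1):
        column[i + 1].receive(column[i].pass_on())
    return out


column = [CCDCell() for _ in range(3)]
for cell, photons in zip(column, [100, 200, 400]):
    cell.expose(photons)

# Function 2 (holding) is implicit: charge stays in place between calls.
readout = [shift_down(column) for _ in range(3)]
print(readout)  # [200.0, 100.0, 50.0]
```

The bottom cell is read first, so the packets leave the column in reverse order of their position.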


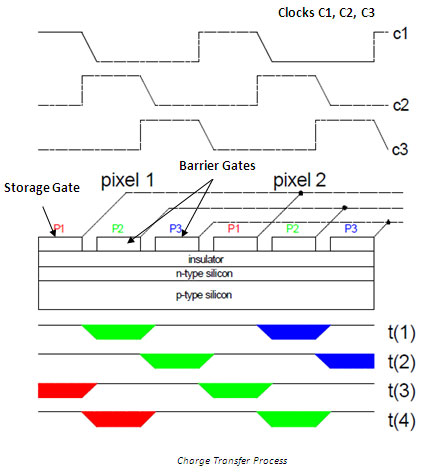

Therein lies the main challenge: reading out these charges. Several clocking schemes, such as two-phase, three-phase and four-phase, along with techniques such as binning, have been used to transfer the charge packets from cell to cell in bucket brigade style while protecting the integrity of each packet. In a three-phase scheme, three control wires pass each cell, each wire connected to one phase of the clock. These wires control the depth of the potential wells, which push and pull the charge packets along the line. Each pixel contains one storage gate and two barrier gates. Every storage gate is connected to the same phase, and the barrier gates are connected in sequence to their respective phase clocks. A charge packet moves from one region into the next when the second gate goes to a higher potential and the first gate goes low. This sequential movement of charges is illustrated in the figure below.

Fig. 3: Graphical Figure of Sequential Movement of Charges in CCD
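The three-phase transfer can also be sketched in a few lines of Python. This is an idealized model under stated assumptions: gate `i` is taken to be wired to phase `i % 3`, only the gates on the currently high phase hold charge, and the function name `step` is hypothetical.

```python
def step(charge, high_phase, n_phases=3):
    """Advance the clock by one phase.

    charge[i] is the packet under gate i. The next phase goes high and the
    current one goes low, so every packet slides one gate along the line.
    Returns the new charge list, the new high phase and any packet that
    left the end of the line.
    """
    new_high = (high_phase + 1) % n_phases
    moved = [0] * len(charge)
    out = 0
    for i, q in enumerate(charge):
        if i % n_phases != high_phase:
            # Barrier gate (low potential): holds no charge in this model
            continue
        if i + 1 < len(charge):
            moved[i + 1] = q  # gate i+1 is on the phase that just went high
        else:
            out = q           # packet reaches the output
    return moved, new_high, out


# Two pixels of three gates each, with packets under the phase-0 gates
charge = [5, 0, 0, 9, 0, 0]
phase = 0
outputs = []
for _ in range(6):
    charge, phase, out = step(charge, phase)
    print(phase, charge)
    if out:
        outputs.append(out)
```

Three clock steps make up one full cycle and move each packet by exactly one pixel, so after six steps both packets have left the line.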

Attempts were made to use CCDs as memory devices, but other techniques soon took over, and since then CCDs have been used primarily in scanning, microscopy and photography. One-dimensional arrays are used in flatbed scanners, where one line is scanned at a time and the charges are then read out. The array is moved mechanically over the entire page to build up the two-dimensional scanned image. Two-dimensional arrays capture the entire scene in one go and then transfer the charges to the output.

Fig. 4: An Image of One Dimensional Array

Architecture

Three architectures have been used to read out the CCD array:

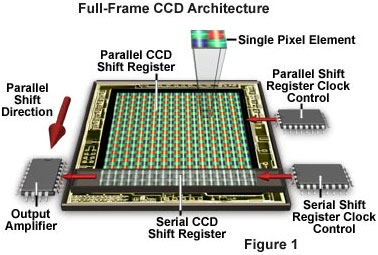

Fig. 5: Diagram Showing Full Frame CCD Architecture

1. Full Frame Read Out: The entire CCD array acts as the active area. This type of device uses a mechanical shutter to stop light from reaching the sensor during read-out, which avoids smearing while the charges are passed down the Vertical-CCDs in parallel and then moved out serially through the Horizontal-CCD. This process is quite time consuming.

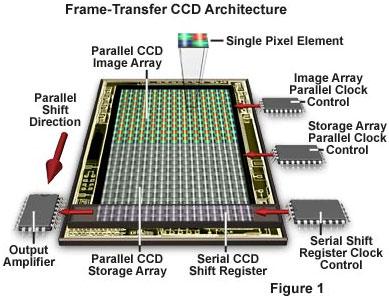

Fig. 6: Diagram Showing Frame Transfer CCD Architecture


2. Frame Transfer: Half of the contiguous array area is used for exposure and the remaining half is opaque. The charges are transferred very quickly from the active area to the opaque area and are then read out from there. This process is faster than full frame read-out because, while charges are being read out of the opaque area, the active area can already capture a new image. It also has the advantage of reduced smearing and light contamination during charge transfer. Its disadvantage is that it needs double the silicon area.

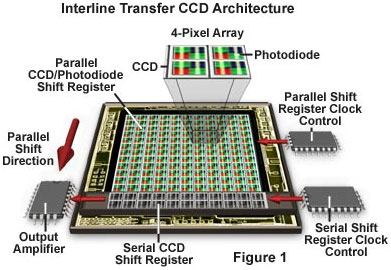

Fig. 7: Diagram Showing Interline Transfer CCD Architecture

3. Interline Transfer: In this type of architecture, each pixel has an active area and an opaque area adjacent to it. The charge is quickly transferred from the light sensitive photodiode to the adjacent Vertical-CCD unit. Only one transfer cycle is needed to move the entire image under the opaque regions, which allows very high shutter speeds and minimal smear. This too has the disadvantage of requiring more silicon area, but modern designs raise the quantum efficiency of the array with microlenses that redirect light away from the opaque regions. The use of microlenses has increased the fill factor to about 90% of that of the other architectures without compromising on speed.
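To see why full frame read-out (item 1 above) is time consuming, the sketch below walks through the parallel and serial shifts on a tiny array. It is a simplified model, not a device driver: the function name `read_out_full_frame` and the convention of one clock cycle per shift are assumptions made for illustration.

```python
def read_out_full_frame(frame):
    """Read a 2-D array of charge packets the way a full frame CCD does.

    frame[r][c] is the charge in row r, column c; the last row sits next
    to the horizontal (serial) register. Returns the packets in output
    order and the number of clock cycles spent.
    """
    rows = [list(r) for r in frame]  # work on a copy
    n_cols = len(rows[0])
    pixels = []
    cycles = 0
    for _ in range(len(rows)):
        # Parallel shift: all vertical CCDs move one row down together and
        # the bottom row drops into the horizontal register.
        serial_register = rows.pop()
        rows.insert(0, [0] * n_cols)
        cycles += 1
        # Serial shift: the horizontal register is emptied one packet at a time.
        while serial_register:
            pixels.append(serial_register.pop())
            cycles += 1
    return pixels, cycles


pixels, cycles = read_out_full_frame([[1, 2, 3],
                                      [4, 5, 6]])
print(pixels, cycles)  # [6, 5, 4, 3, 2, 1] 8
```

In this model an array of R rows and C columns needs R x (C + 1) cycles, and the sensor must stay shuttered for all of them. The frame transfer and interline designs shorten the time during which the image is exposed to stray light, not the serial read-out itself.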

Working

The main performance parameters of a CCD device are its speed, sensitivity, resolution and cost, and the choice of architecture depends on the application. Astronomy requires that as much light as possible be captured, with price seldom a constraint, so full frame read-out CCDs are used. In point and shoot cameras, where shutter speed and cost count the most, the interline architecture is the most popular.

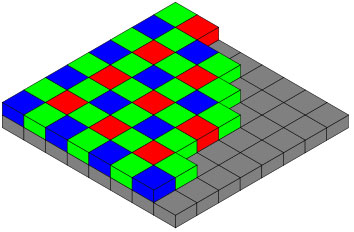

CCD arrays are sensitive only to intensity, not color. Thus, to obtain a color image, filters are used. Color images may be obtained using either a Bayer pattern or three CCDs (3CCD) with a dichroic beam splitter prism.

Fig. 8: Representational Image of Bayer Pattern

The Bayer pattern is a color filter grid placed over the imaging array. Because human eyes are most sensitive to green, two out of every four cells in the grid carry a green filter. The remaining two carry blue and red, completing the RGB color pattern. A digital signal processor interpolates the two missing colors at each pixel from the values of the neighboring pixels. The Bayer pattern, though simple and cheap, throws information away and so compromises image resolution. It also creates artifacts in regions with abrupt changes in light intensity. Some cameras use other color filter patterns. Resolution can be improved in specific applications by microscanning.
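The interpolation step can be illustrated with a small pure-Python sketch. It assumes an RGGB tile layout and fills in each missing color as the plain average of that color within the 3x3 neighborhood; real signal processors use more refined methods, and the function names here are hypothetical.

```python
def bayer_channel(row, col):
    """Color of the filter over pixel (row, col) in an assumed RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb):
    """Keep only the filtered color at each pixel, as the sensor records it."""
    idx = {"R": 0, "G": 1, "B": 2}
    return [[px[idx[bayer_channel(r, c)]] for c, px in enumerate(line)]
            for r, line in enumerate(rgb)]


def demosaic(raw):
    """Estimate missing colors from neighbors (image must be at least 2x2)."""
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        line = []
        for c in range(w):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Collect every sample in the 3x3 window, clipped at the borders
            for rr in range(max(r - 1, 0), min(r + 2, h)):
                for cc in range(max(c - 1, 0), min(c + 2, w)):
                    ch = bayer_channel(rr, cc)
                    sums[ch] += raw[rr][cc]
                    counts[ch] += 1
            own = bayer_channel(r, c)
            # Keep the measured color as is; average the two missing ones
            line.append(tuple(raw[r][c] if ch == own else sums[ch] / counts[ch]
                              for ch in "RGB"))
        out.append(line)
    return out


flat = [[(10, 20, 30)] * 4 for _ in range(4)]  # uniform test image
raw = mosaic(flat)
restored = demosaic(raw)
greens = sum(bayer_channel(r, c) == "G" for r in range(4) for c in range(4))
print(greens, restored[0][0])
```

A uniform image is recovered exactly, but at a sharp edge the averages mix values from both sides, which is one way the artifacts mentioned above arise.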

A dichroic beam splitter prism splits the image into red, green and blue components, each of which falls on a separate CCD in a three-CCD device. This offers higher quantum efficiency than a Bayer mask, as most of the light is captured by the sensors rather than absorbed by the filter layers of the mask. Such devices have been used in professional video camcorders.

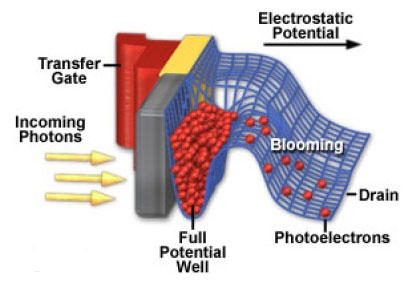

Despite all the good features it has to offer, a CCD device does have a few drawbacks. Transferring charge along a row of many pixels loses a considerable amount of charge, resulting in fading. Improper shutter control or overly bright conditions, which let too many photons reach the CCD element, cause charge to leak into neighboring pixels, an effect known as blooming.
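The fading effect can be put into numbers with a short calculation. It assumes each transfer keeps a fixed fraction of the packet, usually called the charge transfer efficiency (CTE); the CTE values and the transfer count below are illustrative assumptions, not figures from a specific device.

```python
def remaining_fraction(cte, transfers):
    """Fraction of a charge packet left after the given number of transfers."""
    # Each transfer keeps a fraction `cte`, so the losses compound
    return cte ** transfers


# Illustrative values: a packet that needs 1000 transfers to reach the output
for cte in (0.999, 0.9999, 0.99999):
    print(cte, round(remaining_fraction(cte, 1000), 4))
```

Even a loss of one part in ten thousand per transfer costs roughly a tenth of the signal over a thousand transfers, which is why pixels far from the output node fade more than those close to it.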

Fig. 9: Diagram Showing Working of Dichroic Beam Splitter Prism

Further, if photons strike the sensor while charge is being transferred, for example because of improper clocking, data is corrupted in the form of smear. The sensitivity of a CCD device also depends on its operating temperature: as the temperature increases, the dark (leakage) current increases as well, reducing sensitivity. Noise is inherent in all electronic equipment, and in CCD-based devices the signal-to-noise ratio (SNR) may degrade because of photon noise, dark noise, read noise or a combination of them. However, intensive research in this field has yielded positive results and many improved variants. Devices like Electron Multiplying CCDs (EMCCDs), which incorporate on-chip multiplication gain, have helped achieve single photon detection sensitivity without compromising the resolution or efficiency of the CCD structure, while reducing costs. Such sensitivity was otherwise typical of the costly Electron-Bombarded CCDs, which combine an image intensifier with a CCD device.
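How the three noise sources combine can be sketched with the commonly used per-pixel SNR expression. The sketch assumes that photon noise and dark noise follow Poisson statistics (variance equal to the mean electron count) and that read noise is an independent RMS value; the function name and the example numbers are assumptions for illustration.

```python
import math


def snr(signal_e, dark_e, read_noise_e):
    """Signal-to-noise ratio of one pixel, all quantities in electrons.

    signal_e      mean photo-generated electrons
    dark_e        mean dark-current electrons collected during the exposure
    read_noise_e  RMS read noise of the output amplifier
    """
    # Independent noise sources add in quadrature (variances add)
    variance = signal_e + dark_e + read_noise_e ** 2
    return signal_e / math.sqrt(variance)


print(snr(10000, 0, 0))              # bright pixel, photon noise only: 100.0
print(round(snr(100, 0, 10), 2))     # faint pixel dominated by read noise
print(round(snr(100, 400, 10), 2))   # same pixel with added dark charge
```

For faint signals the read noise and dark terms dominate the denominator, which is why cooling the sensor and on-chip multiplication gain matter most in low light applications.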

CCD v/s CMOS

There has been neck and neck competition between CCD and CMOS imaging technologies. CCD and CMOS imagers were invented in the same era, within a span of a few years. Yet CCDs became dominant because they gave superior results with the fabrication technology then available. CMOS imagers depended on uniformity and small feature sizes, which were not achievable until the 1990s, when lithography had advanced enough to support them. It was after this that CMOS imagers made a comeback, and since then the two technologies have fought for market dominance. While CCD sensors are known for offering the best image quality, CMOS imagers offer more functions on the chip and attractive features such as lower power consumption, making them more popular in mobile phone cameras and similar devices. The two can be compared on various aspects, and a sound decision made depending on the requirements of the application.

| Factor | CCD | CMOS |
|---|---|---|
| Responsivity | Moderate | Higher |
| Dynamic Range | High | Moderate |
| Uniformity | High | Low |
| Speed | Moderate | Higher |
| Antiblooming | High | High |
| Signal out of pixel/chip | Electron Packet/Voltage | Voltage/Bits |
| System/Sensor Complexity | High/Low | Low/High |
| Noise | Low | High |

Markets have seen a rapid decline in the CCD's share owing to the growing popularity of CMOS sensors in cell phones and point and shoot cameras, and even industry stalwarts like Canon and Sony, who primarily used CCD products, are now shifting to CMOS imagers. It is expected that more than 95% of the camera market will switch over to CMOS sensors by 2014. But there remains, and will remain, an important segment that continues to bank on CCD sensors: the scientific research and astronomy communities, the biggest example being the Hubble Space Telescope. So, while the light from CCDs might be fading on Earth, we’d still need a CCD to see what’s out there.
