Altered Reality is the future of human-computer interaction as well as of human-reality interaction. Virtual Reality, Augmented Reality and Mixed Reality are terms often cited in the context of emerging interactive technologies, particularly in the mobile domain. Of these forms, Augmented Reality and Mixed Reality are considered altered realities. While Mixed Reality is a relatively new concept, Augmented Reality has already been through many developments, applications and successful commercial ventures. Given the many possibilities where mobile platforms and wearable embedded devices may converge, any embedded engineer should take AR and similar mobile technology developments seriously. Google Glass and Microsoft HoloLens, for instance, are popular products that result from combining embedded electronics and mobile computing.

In a previous article, ‘Altered Realities – Virtual Reality, Augmented Reality & Mixed Reality’, the basic differences between these forms of digital reality were discussed. This article focuses on Augmented Reality and the development of AR apps for mobile platforms (Android and iOS).

Augmented Reality, Virtual Reality and Mixed Reality are often mistaken for purely visual technologies. However, all of these digital realities involve audio as well as visual media as part of the generated reality. Augmented Reality means adding digital information, both visual and audible, to the current perception of reality. This additional information is generated by a processor or computer. In short, Augmented Reality superimposes virtual information on the real-time visual and audible information from the user's surroundings.

Visual Augmentation –

Augmented Reality essentially adds visual information (computer-generated graphics and 3D images) to the current visual perception of reality. The augmentation is done on a display, which can be either Optical-See-Through (OST) or Video-See-Through (VST). OST displays are semi-transparent screens (transparent OLEDs or teleprompter-style glass) onto which a processor can project digital content. The present reality is seen through the semi-transparent screen, and the digital content overlays it. With OST displays, the real scene and the virtual content are combined optically, so the merging happens in the viewer's own eye rather than in a captured camera image.

In many OST displays, such as Google Glass, the virtual content stays static in the field of view. Such displays are called Head-Up Displays (HUDs). HUDs are not true AR displays, because in AR the virtual content should stay fixed to locations in the present reality, and the user's movement must be tracked to show or hide the merged virtual and real content in the surrounding space.

With Video-See-Through displays, the present reality is captured by a camera and shown on a regular LCD or LED screen (of a mobile phone, computer or head-mounted display), and the virtual content is overlaid on the camera image on screen. Smartphone screens are the best example of Video-See-Through displays. LCD and LED monitors or televisions can also serve as VST displays for an AR application.

Fig. 1: Pokémon GO on the Video-See-Through Display of a Mobile Phone
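On a VST display, the overlay step comes down to compositing each rendered virtual pixel over the matching camera pixel. The sketch below assumes 8-bit RGB frames held as nested Python lists and a virtual layer that carries a per-pixel alpha (opacity) value between 0 and 1; the function names are hypothetical, and a real app would leave this blending to the GPU.

```python
def blend_pixel(camera_rgb, virtual_rgb, alpha):
    """Composite one virtual pixel over one camera pixel (the "over" operator).

    alpha = 0.0 keeps the camera pixel; alpha = 1.0 shows only the virtual pixel.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(
        round(alpha * v + (1.0 - alpha) * c)
        for c, v in zip(camera_rgb, virtual_rgb)
    )


def composite_frame(camera_frame, virtual_layer):
    """Overlay a rendered virtual layer on a camera frame of the same size.

    camera_frame:  rows of (r, g, b) pixels from the camera.
    virtual_layer: rows of (r, g, b, alpha) pixels; alpha 0 means no virtual content.
    """
    return [
        [blend_pixel(cam, (vr, vg, vb), va) for cam, (vr, vg, vb, va) in zip(cam_row, virt_row)]
        for cam_row, virt_row in zip(camera_frame, virtual_layer)
    ]


camera = [[(100, 100, 100), (200, 200, 200)]]
virtual = [[(255, 0, 0, 0.0), (255, 0, 0, 0.5)]]   # half-transparent red over the 2nd pixel
print(composite_frame(camera, virtual))            # [[(100, 100, 100), (228, 100, 100)]]
```

Pixels with alpha 0 pass the camera image through untouched, which is how the real scene stays visible everywhere the app has drawn nothing.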

OST displays are not widely used at present, although they often appear in Hollywood science-fiction movies. In the Marvel Cinematic Universe, for example, Iron Man is frequently seen using OST monitors (transparent OLEDs).

Fig. 2: Transparent OLED showing Augmented Reality in Avengers Movie

Audio Augmentation –

Augmented Reality augments not just visual content but also audio content. Audio from the present surroundings is picked up by a microphone (such as a smartphone's microphone), mixed with computer-generated audio, and delivered to speakers or headphones. Audio augmentation is an important aspect of many AR applications; in AR-based navigation apps, for example, it can provide real-time voice assistance.
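Mixing itself can be as simple as a weighted, clipped sum of samples. This is a minimal sketch assuming both streams are 16-bit PCM at the same sample rate; `mix_audio` and its gain parameters are hypothetical, and a real app would rely on the platform's audio APIs for capture, resampling and playback.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def mix_audio(mic_samples, virtual_samples, mic_gain=1.0, virtual_gain=1.0):
    """Mix microphone audio with computer-generated audio, sample by sample.

    Both inputs are 16-bit PCM samples at the same sample rate. The shorter
    stream is padded with silence so no audio is dropped, and each result is
    clipped to the 16-bit range to avoid wrap-around distortion.
    """
    length = max(len(mic_samples), len(virtual_samples))
    mixed = []
    for i in range(length):
        mic = mic_samples[i] if i < len(mic_samples) else 0
        virt = virtual_samples[i] if i < len(virtual_samples) else 0
        value = round(mic_gain * mic + virtual_gain * virt)
        mixed.append(max(INT16_MIN, min(INT16_MAX, value)))
    return mixed


# Surrounding sound lowered to 50% so a (hypothetical) navigation prompt stays audible.
print(mix_audio([1000, -2000, 30000], [500, 500, 10000, 200], mic_gain=0.5))
```

Lowering the microphone gain while a prompt plays is one simple design choice; without it, loud surroundings and the prompt would simply add up and risk clipping.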

True Augmented Reality –

For most people, Google Glass is the ultimate AR example, but it is not a true AR product; it is only a HUD. Digital reality technologies are still in their infancy, so their true nature, as imagined by their developers, is not yet widely known (and probably will not be until AR and its counterparts appear in their true form). The true nature of Augmented Reality can be understood through the following factors –

AR is 3D :- Augmented Reality adds digital content to the present reality. Human eyes see the world in three dimensions, so AR involves merging computer-generated 3D images into the current perception of reality. A major challenge for AR is generating high-resolution 3D images that look life-like rather than animated.

AR is Real-Time :- The current reality must be captured and merged with virtual content in real time. In AR, virtuality and reality must be merged frame by frame, at every instant. Any lag in capturing reality or merging the virtual content breaks the immersive experience.
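"Real-time" can be made concrete as a per-frame time budget: at a target rate of f frames per second, capture, registration, tracking and rendering together must finish within 1000/f milliseconds. The stage names and timings below are hypothetical and only illustrate the arithmetic.

```python
def frame_budget_ms(target_fps):
    """Time available to capture, register, track and render one frame."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def fits_budget(stage_latencies_ms, target_fps):
    """Return (total latency, True if the pipeline keeps up with target_fps)."""
    total = sum(stage_latencies_ms.values())
    return total, total <= frame_budget_ms(target_fps)


# Hypothetical per-stage timings for one frame, in milliseconds.
stages = {"capture": 4.0, "registration": 6.0, "tracking": 3.0, "render": 5.0}
print(frame_budget_ms(30))       # about 33.3 ms per frame
print(fits_budget(stages, 30))   # (18.0, True)
print(fits_budget(stages, 60))   # (18.0, False): 18 ms exceeds the ~16.7 ms budget
```

The same pipeline can be real-time at 30 fps and lag at 60 fps, which is why AR developers budget each stage rather than the app as a whole.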

AR is Interactive :- The user must be able to interact with the altered reality generated through AR. An AR system must sense user movement (such as the location and motion of the smartphone in mobile AR apps) and alter the on-screen virtual content accordingly. The user must also be able to interact with the virtual content, for example to move, remove or explore it.

Registration and Tracking –

Augmented Reality means adding virtual content to a real-time perception so that the virtual content seems to be an inherent part of reality. The process of capturing reality and placing virtual content at absolute positions in the surrounding space is called ‘Registration’. The process of tracking user movement and updating the displayed altered reality accordingly is called ‘Tracking’. Registration determines where things are in space, while tracking lets them move into or out of view and lets the user explore them by moving around. Both must be done in real time: real-time registration ensures that new virtual objects appear in the altered reality immediately, and real-time tracking lets the user interact with the altered reality without delays or interruptions.
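Registration and tracking can be illustrated with a simplified pinhole-camera sketch. Registration fixes a virtual object at a world position; tracking updates the camera pose as the user moves, and the object's screen position is recomputed from that pose every frame. The sketch assumes a pose with position and yaw only (real AR frameworks track full 3D orientation), and the class names and camera parameters are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraPose:
    """Tracked camera pose (hypothetical, yaw-only model).

    x: metres east, y: metres up, z: metres north; yaw_deg: 0 = facing north,
    positive = turned clockwise (to the right).
    """
    x: float
    y: float
    z: float
    yaw_deg: float


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical values used below)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def world_to_camera(point, pose):
    """Express a registered world point in the camera frame (x right, y up, z forward)."""
    px, py, pz = point[0] - pose.x, point[1] - pose.y, point[2] - pose.z
    yaw = math.radians(pose.yaw_deg)
    x_c = px * math.cos(yaw) - pz * math.sin(yaw)   # along the camera's right axis
    z_c = px * math.sin(yaw) + pz * math.cos(yaw)   # along the viewing direction
    return x_c, py, z_c


def project(point, pose, k):
    """Return the pixel (u, v) where a world-anchored point appears, or None if off-screen."""
    x_c, y_c, z_c = world_to_camera(point, pose)
    if z_c <= 0:
        return None                                  # behind the camera
    u = k.cx + k.fx * x_c / z_c
    v = k.cy - k.fy * y_c / z_c                      # image rows grow downwards
    if 0 <= u < k.width and 0 <= v < k.height:
        return u, v
    return None


k = Intrinsics(fx=800, fy=800, cx=640, cy=360, width=1280, height=720)
anchor = (0.0, 0.0, 5.0)                             # registered 5 m north of the start point
for yaw in (0, 10, 180):                             # tracking: the user turns right
    print(yaw, project(anchor, CameraPose(0, 0, 0, yaw), k))
```

As the user turns right, the anchored object slides left on screen (from u = 640 to roughly u = 499) and disappears when the user faces away, which is the world-fixed behaviour that distinguishes true AR from a HUD.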

Mobile AR –

The most common Augmented Reality applications have been developed for the mobile platform. Mobile AR uses Video-See-Through displays, as smartphones capture reality with their embedded cameras and augment it on their LCD or LED screens. Mobile AR apps are distinguished by their registration and tracking technique. There are two registration and tracking techniques used in mobile AR applications –

1) Sensor based AR (Location Based)

2) Computer Vision based AR

In sensor-based AR, location (GPS) and orientation (accelerometer, gyroscope and magnetometer) sensors are used to track the user's current location and movements. Based on this location and orientation information, the virtual content is registered in the physical reality. The location sensor is generally a GNSS (Global Navigation Satellite System) receiver. The most popular GNSS is GPS (Global Positioning System), maintained by the United States. Other satellite navigation systems include GLONASS (Russia), Galileo (Europe), the BeiDou Navigation Satellite System (BDS, China), and the regional systems NavIC (Navigation with Indian Constellation, India) and QZSS (Quasi-Zenith Satellite System, Japan). At the time of writing, Galileo and BeiDou were still being deployed, with full service planned for around 2020.
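For sensor-based registration, an app can compare the bearing from the user's GNSS fix to a point of interest (POI) with the compass heading of the camera to decide whether, and where, the POI's label appears on screen. This is a sketch under stated assumptions: the great-circle bearing formula is standard, but the 60° horizontal field of view, the 1280-pixel screen width and the function names are hypothetical, and the heading is assumed to be already tilt-compensated.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0


def screen_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
             hfov_deg=60.0, width_px=1280):
    """Horizontal pixel where a POI label should be drawn, or None if outside the view.

    heading_deg: compass heading of the camera (magnetometer, usually fused with
    gyroscope data). hfov_deg and width_px are hypothetical camera/screen values.
    """
    target = bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    # Signed angle from the viewing direction to the POI, in [-180, 180).
    rel = (target - heading_deg + 540.0) % 360.0 - 180.0
    half_fov = hfov_deg / 2.0
    if abs(rel) >= half_fov:
        return None
    focal_px = (width_px / 2.0) / math.tan(math.radians(half_fov))
    return width_px / 2.0 + focal_px * math.tan(math.radians(rel))


# A POI slightly east of the user.
print(screen_x(0.0, 0.0, 90.0, 0.0, 0.001))   # about 640 (centre, facing east)
print(screen_x(0.0, 0.0, 80.0, 0.0, 0.001))   # about 835 (right of centre)
print(screen_x(0.0, 0.0, 270.0, 0.0, 0.001))  # None (behind the user)
```

Because GNSS fixes can be off by several metres and compasses by several degrees, labels placed this way tend to jitter, which is one reason the sensor-based technique struggles with objects that are close by or not at a fixed location.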

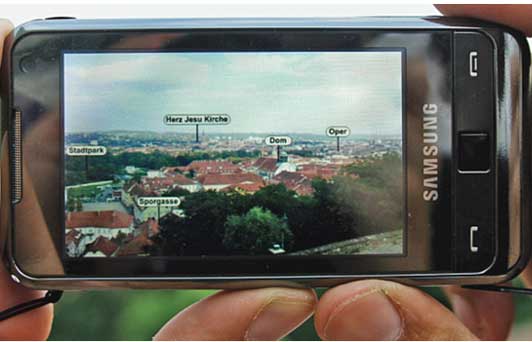

Popular mobile AR applications using sensor-based AR include AR browsers (which show graphical information about nearby things by sensing the user's location and surrounding objects), Pokémon GO (an AR-based game) and AR-based navigation apps. However, the sensor-based technique does not work well when real objects are out of the user's line of sight, or when the real objects do not have a fixed geographical location.

Fig. 3: AR Browser using Sensor and Location Based Augmented Reality
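Before labelling anything, an AR browser typically has to decide which things around the user are close enough to show. A minimal sketch, assuming POIs stored as hypothetical (name, latitude, longitude) tuples and a hypothetical 500 m radius, using the haversine great-circle distance on a spherical Earth (typically adequate at these distances):

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two GNSS fixes given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_pois(user_lat, user_lon, pois, radius_m):
    """Return (name, distance_m) for POIs within radius_m, nearest first."""
    hits = []
    for name, lat, lon in pois:
        d = haversine_m(user_lat, user_lon, lat, lon)
        if d <= radius_m:
            hits.append((name, d))
    return sorted(hits, key=lambda item: item[1])


# Hypothetical points of interest: (name, latitude, longitude).
pois = [("Cafe", 0.0, 0.001), ("Museum", 0.0, 0.01), ("Station", 0.0005, 0.0)]
for name, d in nearby_pois(0.0, 0.0, pois, radius_m=500):
    print(f"{name}: {d:.0f} m")
```

Only the POIs that pass this filter would then be positioned on screen using the device's orientation; the museum, about 1.1 km away, is skipped.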

The other technique used in mobile AR is computer vision based. Here, image processing is used to identify surrounding objects, and virtual content is registered to reality based on the identified objects. Vision-based AR can be marker-based, where the app looks for predefined visual markers such as printed patterns, or marker-less, where it recognizes natural features of the scene. For example, many camera apps identify faces to tag people or to add virtual text or images to the captured image, and AR camera apps like Snapchat use marker-less AR to identify faces and superimpose virtual stickers.

Fig. 4: Snapchat uses Marker-Less Vision-Based Augmented Reality
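The core idea behind marker-based recognition, finding a known pattern in a camera frame, can be shown with exhaustive template matching using the sum of absolute differences (SAD). This is only a toy sketch under assumed inputs (tiny grayscale frames as nested lists and a hypothetical acceptance threshold); practical marker trackers detect square fiducial markers, decode their IDs and estimate their full pose, while marker-less approaches such as face tracking use learned detectors instead of a fixed template.

```python
def find_marker(image, marker, max_mean_diff=10.0):
    """Locate a marker pattern in a grayscale image by exhaustive template matching.

    Both arguments are lists of rows of 0-255 intensities. The score at each
    position is the sum of absolute differences (SAD); the best position is
    accepted only if the mean per-pixel difference is at most max_mean_diff.
    Returns (row, col) of the marker's top-left corner, or None.
    """
    ih, iw = len(image), len(image[0])
    mh, mw = len(marker), len(marker[0])
    best_pos, best_sad = None, float("inf")
    for r in range(ih - mh + 1):
        for c in range(iw - mw + 1):
            sad = sum(
                abs(image[r + i][c + j] - marker[i][j])
                for i in range(mh)
                for j in range(mw)
            )
            if sad < best_sad:
                best_pos, best_sad = (r, c), sad
    if best_pos is None or best_sad / (mh * mw) > max_mean_diff:
        return None
    return best_pos


# A 2x2 checker "marker" hidden in a 4x5 frame.
marker = [[255, 0],
          [0, 255]]
frame = [[10, 10, 10, 10, 10],
         [10, 10, 250, 5, 10],
         [10, 10, 5, 250, 10],
         [10, 10, 10, 10, 10]]
print(find_marker(frame, marker))  # (1, 2)
```

Once the marker's position is known, virtual content can be registered relative to it, so the content follows the marker as the camera moves.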

Mobile AR Architecture –

Mobile AR apps have an architecture similar to that of any other mobile application. Every mobile AR app has an application layer, an AR layer and an operating system layer. The application layer generates virtual content and manages it on screen. The AR layer handles the registration of virtuality with reality and the tracking of user movement. The operating system (OS) layer provides the tools and libraries the AR layer needs to interface with the mobile platform. The OS layer provides no AR functionality itself, but it enables the AR layer to work on a mobile platform. For example, the display module in the AR layer can access the phone's camera only through the OS layer.

On Android, the Google Android API and jMonkeyEngine form the operating system layer. On iOS, the Core Services layer provides the OS layer to an AR app.
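The layering can be sketched in code. This is a hypothetical, platform-neutral illustration in which all class and method names are invented: the OS layer is a stand-in for the platform API (on a real device, the Android or iOS frameworks), the AR layer reaches the camera and heading sensor only through it, and the application layer registers content and decides what is visible.

```python
from abc import ABC, abstractmethod


class OSLayer(ABC):
    """Platform services (camera, sensors). Contains no AR logic."""

    @abstractmethod
    def read_camera_frame(self):
        ...

    @abstractmethod
    def read_heading_deg(self):
        ...


class FakeOS(OSLayer):
    """Hypothetical stand-in for the platform API so the sketch runs off-device."""

    def __init__(self, headings):
        self._headings = list(headings)
        self._frame_no = 0

    def read_camera_frame(self):
        self._frame_no += 1
        return f"frame-{self._frame_no}"

    def read_heading_deg(self):
        return self._headings.pop(0)


class ARLayer:
    """Registration and tracking; uses the camera and sensors only via the OS layer."""

    def __init__(self, os_layer):
        self.os = os_layer
        self.anchors = {}                     # content id -> registered world heading

    def register(self, content_id, world_heading_deg):
        self.anchors[content_id] = world_heading_deg

    def track(self):
        """Return the current frame and each anchor's angle from the view direction."""
        frame = self.os.read_camera_frame()
        heading = self.os.read_heading_deg()
        offsets = {cid: (h - heading + 540.0) % 360.0 - 180.0 for cid, h in self.anchors.items()}
        return frame, offsets


class ApplicationLayer:
    """Creates virtual content and decides what to show on screen."""

    def __init__(self, ar_layer, half_fov_deg=30.0):
        self.ar = ar_layer
        self.half_fov = half_fov_deg

    def start(self):
        self.ar.register("welcome-sign", 90.0)   # hypothetical content placed due east

    def update(self):
        frame, offsets = self.ar.track()
        visible = sorted(cid for cid, off in offsets.items() if abs(off) < self.half_fov)
        return frame, visible


app = ApplicationLayer(ARLayer(FakeOS(headings=[85.0, 200.0])))
app.start()
print(app.update())  # ('frame-1', ['welcome-sign'])
print(app.update())  # ('frame-2', [])
```

Keeping platform calls behind the OS layer means the AR and application layers could, in principle, be reused on another platform by swapping only that layer.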

How AR apps work –

AR applications using Video-See-Through displays first capture video from a camera and show the captured frames on the screen. The camera parameters of the captured video are matched with the parameters of the virtual space. Then either sensor data is matched with the video parameters, or image processing is used to identify objects in the captured video, so that virtual content can be located and superimposed at fixed locations in the altered reality. Once the virtual content is correctly registered, user movements are tracked to update the virtual content. With Optical-See-Through displays, the only difference is that only the virtual content is rendered on the screen, though reality is still captured by a camera. This is the main control loop of any AR application, whether it is developed for a mobile platform, a desktop system or a gadget built around compact embedded electronics. In mobile AR, this control loop forms the main activity of the app, and it repeats for as long as the application runs.
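The control loop can be sketched as a function that takes each stage as a callable. All names here are hypothetical; the sketch follows the order described above (capture, register once, then track and render every frame) and adds a `max_frames` limit only so it can be run and tested. In real frameworks, registration is usually refined continuously rather than done once.

```python
def ar_control_loop(capture, register, track, render, see_through="video", max_frames=None):
    """Run a simplified AR main loop (hypothetical sketch).

    capture()             -> camera frame
    register(frame)       -> virtual content anchored to the scene (sensor- or vision-based)
    track(frame, content) -> content updated for the user's latest movement
    render(layers)        -> draws the list of layers on the display
    see_through: "video" draws the camera frame under the content;
                 "optical" draws only virtual content (reality is seen directly).
    max_frames: stop after this many frames (None = run until the app closes).
    """
    content = None
    frames = 0
    while max_frames is None or frames < max_frames:
        frame = capture()                    # reality is captured for both display types
        if content is None:
            content = register(frame)        # place virtual content at fixed locations
        else:
            content = track(frame, content)  # follow user movement frame by frame
        layers = [frame, content] if see_through == "video" else [content]
        render(layers)
        frames += 1
    return frames


drawn = []
camera_feed = iter(["f0", "f1", "f2"])
n = ar_control_loop(
    capture=lambda: next(camera_feed),
    register=lambda frame: {"cube": 0},
    track=lambda frame, content: {"cube": content["cube"] + 1},
    render=drawn.append,
    max_frames=3,
)
print(n, drawn)
```

Passing the stages in as callables keeps the loop identical for sensor-based and vision-based registration; only the `register` and `track` functions change.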

The electronic components essentially required for computer-vision-based AR development are a camera, a processor that can run an operating system, and a display screen (such as an LCD, LED or transparent OLED). Besides capturing video and displaying the augmented video on screen, the processor and operating system must be able to render 3D images in real time. For sensor-based AR development, sensors like an accelerometer, gyroscope and magnetometer, and for location-based apps a GNSS receiver, are additionally required.
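The gyroscope gives smooth, fast orientation updates but drifts when its rate is integrated, while the accelerometer gives a drift-free but noisy tilt estimate from gravity. A complementary filter is one simple, widely used way to combine them. The sketch assumes a single pitch angle, one common axis convention for the accelerometer formula (conventions differ between devices) and a hypothetical 0.5 °/s gyro bias; mobile platforms also offer ready-made fused orientation sensors, which this sketch does not reproduce.

```python
import math


def accel_pitch_deg(ax, ay, az):
    """Pitch from the gravity vector measured by the accelerometer (device at rest).

    Uses one common axis convention; real devices may define axes differently.
    """
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(pitch_deg, gyro_rate_dps, accel_deg, dt_s, alpha=0.98):
    """Fuse gyroscope and accelerometer estimates of one orientation angle.

    The gyroscope rate is integrated for a fast, smooth response (but it drifts);
    the small weight (1 - alpha) keeps pulling the estimate towards the
    drift-free accelerometer angle.
    """
    gyro_estimate = pitch_deg + gyro_rate_dps * dt_s
    return alpha * gyro_estimate + (1.0 - alpha) * accel_deg


dt, bias = 0.01, 0.5            # 100 Hz updates; hypothetical gyro bias in deg/s
pitch_fused = pitch_gyro_only = 0.0
for _ in range(1000):           # 10 s with the phone held level and still
    accel = accel_pitch_deg(0.0, 0.0, 9.81)
    pitch_gyro_only += bias * dt
    pitch_fused = complementary_filter(pitch_fused, bias, accel, dt)
print(round(pitch_gyro_only, 2), round(pitch_fused, 3))  # gyro-only drifts to ~5 degrees
```

With the phone held still, pure gyroscope integration drifts about 5° in 10 s, while the fused estimate settles near 0.245°, small enough that registered content would not visibly slide.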

Smartphones come with embedded cameras, efficient processors, sufficient RAM and storage, display screens and multiple sensors, so it is easy to start developing AR applications on the mobile platform. Mobile phones are also portable, which widens the scope of AR applications developed for them. While the rapid rise in mobile phone sales has created a big consumer market for app developers, continuous software development in the mobile domain has produced ready-to-use libraries and development tools that ease and accelerate AR application development on the mobile platform.

Dedicated AR applications can also be developed on desktop platforms using a desktop computer, a webcam and projectors or monitors. However, desktop-based AR development requires a customized software development environment and writing several core modules and software libraries from scratch. Also, for sensor-based AR, custom electronic modules must be designed to interface with the desktop system over Wi-Fi, Bluetooth or the internet. Dedicated desktop-based AR applications can be developed for homes, offices, education and industrial environments.

For getting started with AR application development, the mobile platform is the most suitable, considering how easy it is to begin and the scope for commercial returns. Desktop or embedded platforms can be chosen for niche AR applications that require a dedicated electronic setup and entirely different, more sophisticated software development.
